Style transfer involves combining the style of one image with the content of another to form a new image. Unlike traditional two-dimensional images, which only capture the spatial intensity of light rays, four-dimensional light fields also capture the angular direction of the light rays. Thus, applying style transfer to a light field requires not only rendering a convincing style transfer for each view, but also preserving the angular structure of the light field. We present a novel optimization-based method for light field style transfer which iteratively propagates the style transfer from the centre view towards the outer views while enforcing local angular consistency. For this purpose, we propose a new initialisation method and a new angular loss function for the optimization process. In addition, since style transfer for light fields is an emerging topic, no clear evaluation procedure is available. We therefore investigate the use of a recently proposed metric designed to evaluate light field angular consistency, as well as a proposed variant of this metric.

The code will soon be available.

The videos below (click on an image to start a video) show all the views of the original and stylized light fields. Each video was created by first scanning the views in a horizontal snake pattern from the top-left to the bottom-right view, followed by a vertical snake pattern from the bottom-right back to the top-left view. Note that the video baseline results were obtained using the horizontal snake pattern going from the top-left to the bottom-right view. Thus, while the first half of these videos may appear consistent, the second half reveals that the angular consistency is not fully preserved.
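The scan order described above can be sketched as follows. This is a minimal illustration, not the code used to produce the videos: the function names and the `(row, column)` indexing with `(0, 0)` as the top-left view are assumptions, and the grid is assumed to have an odd number of rows and columns so that the horizontal pass ends at the bottom-right view and the vertical pass ends at the top-left view.

```python
def horizontal_snake(rows, cols):
    """Visit rows top to bottom, alternating left-to-right and right-to-left."""
    order = []
    for r in range(rows):
        cs = range(cols) if r % 2 == 0 else range(cols - 1, -1, -1)
        order.extend((r, c) for c in cs)
    return order


def vertical_snake_back(rows, cols):
    """Visit columns right to left, alternating bottom-to-top and top-to-bottom."""
    order = []
    for i, c in enumerate(range(cols - 1, -1, -1)):
        rs = range(rows - 1, -1, -1) if i % 2 == 0 else range(rows)
        order.extend((r, c) for r in rs)
    return order


def video_scan_order(rows, cols):
    """Horizontal snake (top-left to bottom-right), then vertical snake back."""
    return horizontal_snake(rows, cols) + vertical_snake_back(rows, cols)


if __name__ == "__main__":
    for view in video_scan_order(3, 3):
        print(view)
```

With this ordering, consecutive frames always come from the same or an adjacent view, so the horizontal pass mostly shows horizontal parallax and the vertical pass mostly shows vertical parallax.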

| Original | Image baseline [1] | Video baseline [2] | Hart et al. [3] | Ours | |

| Swans [4] |  |

|

|

|

|

| Bikes [5] |  |

|

|

|

|

| Table [6] |  |

|

|

|

|

| Herbs [6] |  |

|

|

n/a |  |

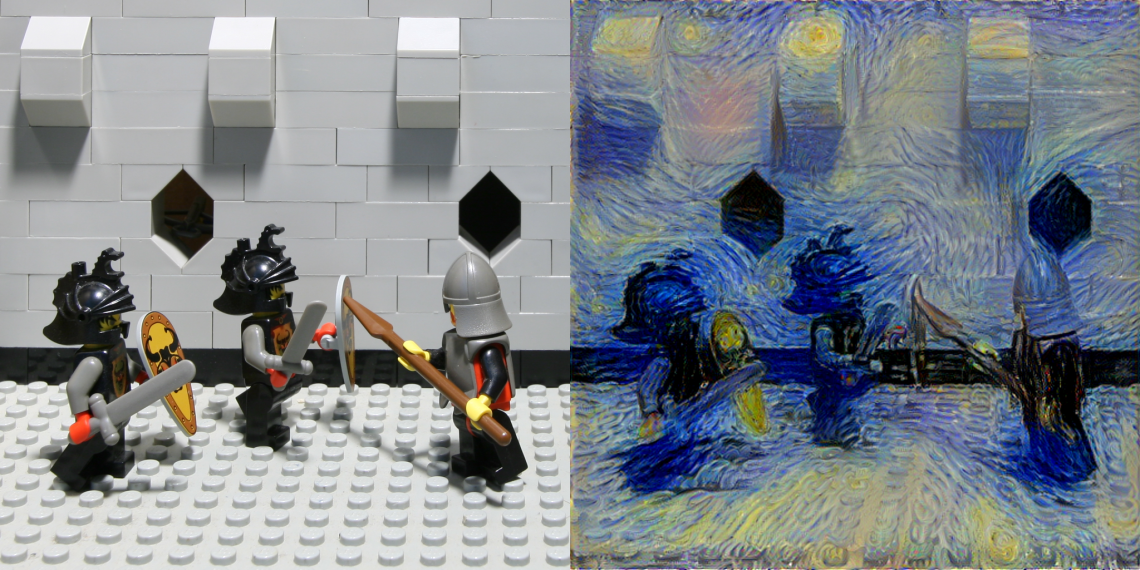

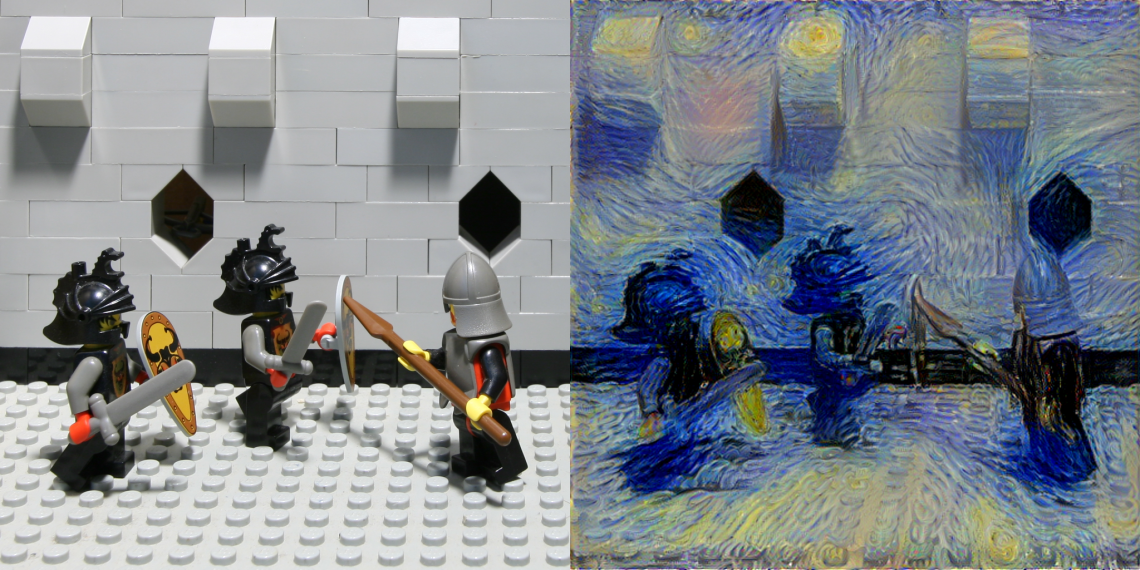

| Lego Knights [7] |  |

|

|

|

|

| Crystal Ball [7] |  |

|

|

|

|

The videos below (click on an image to start a video) show focal stacks generated from the original and stylized light fields. Refocused images were generated using the shift-and-sum algorithm with a full aperture. Each video sweeps through the focal stack from back to front and then from front to back.
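For readers unfamiliar with shift-and-sum refocusing, the idea can be sketched as below. This is a simplified illustration under stated assumptions, not the implementation used for the videos: the light field is assumed to be a NumPy array indexed as `(U, V, H, W)` (optionally with a trailing colour axis), shifts are rounded to whole pixels and applied with `np.roll` (which wraps around at the borders), and the sign convention of the hypothetical `slope` parameter is arbitrary. "Full aperture" is taken here to mean that all views contribute with equal weight.

```python
import numpy as np


def refocus_shift_and_sum(lf, slope):
    """Refocus a light field by shifting each view and averaging all of them.

    lf    : array of shape (U, V, H, W) or (U, V, H, W, C)
    slope : shift in pixels per unit of angular distance from the centre view
    """
    U, V = lf.shape[:2]
    uc, vc = (U - 1) / 2.0, (V - 1) / 2.0  # angular coordinates of the centre
    acc = np.zeros(lf.shape[2:], dtype=np.float64)
    for u in range(U):
        for v in range(V):
            # Integer-pixel shift proportional to the distance from the centre.
            dy = int(round(slope * (u - uc)))
            dx = int(round(slope * (v - vc)))
            acc += np.roll(lf[u, v], shift=(dy, dx), axis=(0, 1))
    # Full aperture: every view contributes with equal weight.
    return acc / (U * V)


def focal_stack(lf, slopes):
    """Stack of refocused images, one per slope value."""
    return np.stack([refocus_shift_and_sum(lf, s) for s in slopes])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    lf = rng.random((5, 5, 32, 32, 3))
    slopes = np.linspace(-2.0, 2.0, 9)
    stack = focal_stack(lf, slopes)
    # Sweep in one direction and then back, as in the videos.
    sweep = np.concatenate([stack, stack[::-1]])
    print(sweep.shape)
```

A sub-pixel implementation would interpolate the shifted views instead of rounding, which avoids visible jumps between neighbouring focal planes.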

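| Light field | Original | Image baseline [1] | Video baseline [2] | Hart et al. [3] | Ours |
|---|---|---|---|---|---|
| Swans [4] | (video) | (video) | (video) | (video) | (video) |
| Bikes [5] | (video) | (video) | (video) | (video) | (video) |
| Table [6] | (video) | (video) | (video) | (video) | (video) |
| Herbs [6] | (video) | (video) | (video) | n/a | (video) |
| Lego Knights [7] | (video) | (video) | (video) | (video) | (video) |
| Crystal Ball [7] | (video) | (video) | (video) | (video) | (video) |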

[1] Gatys, Leon A., Alexander S. Ecker, and Matthias Bethge. “Image style transfer using convolutional neural networks.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

[2] Ruder, Manuel, Alexey Dosovitskiy, and Thomas Brox. “Artistic style transfer for videos.” German Conference on Pattern Recognition. Springer, Cham, 2016.

[3] Hart, David, Bryan Morse, and Jessica Greenland. “Style Transfer for Light Field Photography.” The IEEE Winter Conference on Applications of Computer Vision. 2020.

[4] Rerabek, Martin, and Touradj Ebrahimi. “New light field image dataset.” 8th International Conference on Quality of Multimedia Experience (QoMEX). 2016.

[5] Abhilash Sunder Raj, Michael Lowney, Raj Shah, and Gordon Wetzstein. “Stanford Lytro light field archive.” http://lightfields.stanford.edu/LF2016.html

[6] Honauer, Katrin, et al. “A dataset and evaluation methodology for depth estimation on 4d light fields.” Asian Conference on Computer Vision. Springer, Cham, 2016.

[7] “The Stanford light field archive.” http://lightfield.stanford.edu/lfs.html